LMCache: Transforming Large Language Model Inference in the PyTorch Ecosystem

We’re thrilled to announce that LMCache has officially joined the PyTorch Ecosystem, adding to the open-source tooling available for AI inference. To explore other projects in the ecosystem, see the PyTorch Landscape, which lists them and explains how a project can join.

Understanding the Challenges of Large Language Models

Running inference for large language models (LLMs) presents its own challenges. A single inference request needs far fewer resources than training, but serving costs recur with every request and can escalate quickly as demand grows for fast responses and accurate outputs. When accuracy cannot be traded away, the remaining lever is to use compute and memory more efficiently without degrading performance.

What is LMCache?

Building on research from a team at the University of Chicago, LMCache is a key-value (KV) caching solution for LLM serving. The KV cache holds the attention keys and values an engine computes for each token during prefill. LMCache extracts and stores the KV caches generated by modern LLM engines such as vLLM and SGLang, so that they can be shared across engines and reused across queries instead of being recomputed.
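To make reuse across queries concrete, here is a minimal sketch of a prefix-keyed KV store. It assumes, for illustration only, that tokens are split into fixed-size chunks and that each chunk is keyed by a hash of that chunk plus every token before it, so a hit on a chunk implies the whole prefix up to it matches. `PrefixKVStore`, `chunk_keys`, and `CHUNK_SIZE` are hypothetical names, not LMCache's API, and the stored values are placeholders for real KV tensors.

```python
import hashlib
from typing import Dict, List, Sequence

# Hypothetical chunk size, kept tiny for illustration.
CHUNK_SIZE = 4


def chunk_keys(tokens: Sequence[int], chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Return one key per full chunk; each key hashes the chunk plus every token before it."""
    keys: List[str] = []
    running = hashlib.sha256()
    full_len = len(tokens) - len(tokens) % chunk_size  # ignore a trailing partial chunk
    for start in range(0, full_len, chunk_size):
        chunk = tokens[start:start + chunk_size]
        running.update((",".join(map(str, chunk)) + ";").encode())
        keys.append(running.hexdigest())  # digest of the whole prefix so far
    return keys


class PrefixKVStore:
    """Hypothetical store mapping prefix-chunk keys to KV data (placeholders here)."""

    def __init__(self, chunk_size: int = CHUNK_SIZE) -> None:
        self.chunk_size = chunk_size
        self._store: Dict[str, object] = {}

    def put(self, tokens: Sequence[int], kv_chunks: Sequence[object]) -> None:
        """Save the KV data one engine produced, one entry per full chunk."""
        for key, kv in zip(chunk_keys(tokens, self.chunk_size), kv_chunks):
            self._store[key] = kv

    def get_prefix(self, tokens: Sequence[int]) -> List[object]:
        """Return cached KV chunks for the longest cached prefix of `tokens`."""
        hits: List[object] = []
        for key in chunk_keys(tokens, self.chunk_size):
            if key not in self._store:
                break  # prefix diverges or was never stored: stop here
            hits.append(self._store[key])
        return hits
```

In this sketch, a later query that starts with the same eight tokens gets both stored chunks back and only needs to prefill its new tokens, while a query that diverges after four tokens reuses just the first chunk.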

How LMCache Enhances LLM Performance

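LMCache gives LLM engines a new interface: instead of centering on individual tokens, it treats KV caches as a first-class medium for storage and communication. Its architecture supports both KV cache offloading, which moves caches out of scarce GPU memory into larger tiers such as CPU memory or storage, and prefill-decode (PD) disaggregation, in which prefill and decode run on separate engines and the KV cache produced by prefill must be handed to the decode engine. LMCache makes these cross-engine cache transfers more efficient.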
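Offloading can be pictured as a tiered cache: when the fast tier fills up, the least recently used KV entries are demoted to a slower, larger tier instead of being discarded, and are promoted back when reused. The sketch below illustrates that idea under simple assumptions; `TieredKVCache` and its LRU policy are hypothetical and do not describe LMCache's actual eviction logic.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class TieredKVCache:
    """Hypothetical two-tier LRU cache: a small fast tier backed by a larger slow tier."""

    def __init__(self, fast_capacity: int, slow_capacity: int) -> None:
        self.fast: "OrderedDict[Hashable, object]" = OrderedDict()  # stand-in for GPU memory
        self.slow: "OrderedDict[Hashable, object]" = OrderedDict()  # stand-in for CPU RAM or disk
        self.fast_capacity = fast_capacity
        self.slow_capacity = slow_capacity

    def put(self, key: Hashable, kv: object) -> None:
        self.slow.pop(key, None)  # keep a single copy per key
        self.fast[key] = kv
        self.fast.move_to_end(key)  # mark as most recently used
        self._evict()

    def get(self, key: Hashable) -> Optional[object]:
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        if key in self.slow:
            kv = self.slow.pop(key)
            self.put(key, kv)  # promote back to the fast tier on reuse
            return kv
        return None  # miss: the engine would have to recompute this KV

    def _evict(self) -> None:
        while len(self.fast) > self.fast_capacity:
            key, kv = self.fast.popitem(last=False)  # least recently used entry
            self.slow[key] = kv  # offload instead of discarding
        while len(self.slow) > self.slow_capacity:
            self.slow.popitem(last=False)  # only now is the KV actually lost
```

The design choice illustrated here is that eviction from the fast tier becomes a cheap move rather than a loss, so a later request pays a transfer cost instead of a full recomputation.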

Key Features of LMCache

LMCache's performance comes from three key features:

- Optimized KV Cache Data Movement: LMCache uses batched data movement operations and pipelines compute with I/O, so that transferring KV caches overlaps with useful work instead of stalling it.

- Modular KV Cache Connector Component: the connector that links LMCache to an inference engine is a separate, modular component, which lets LMCache evolve rapidly alongside the engines it supports.

- First-Class Control API: operations such as pinning, lookup, cleanup, movement, and compression enable dynamic orchestration of caches across GPU, CPU, storage, and network layers.
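Batching and compute/I/O pipelining can be illustrated in a few lines. This sketch assumes a hypothetical `load` function that moves one batch of KV chunks (for example, from CPU to GPU) and a hypothetical `compute` function that consumes it; while batch i is being computed on, a background thread is already loading batch i+1. This is plain double buffering, shown as an illustration of the idea rather than LMCache's implementation.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
LoadedT = TypeVar("LoadedT")
R = TypeVar("R")


def batched(items: Sequence[T], batch_size: int) -> List[List[T]]:
    """Group items so each transfer moves several chunks at once."""
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


def pipelined(
    batches: Sequence[List[T]],
    load: Callable[[List[T]], LoadedT],
    compute: Callable[[LoadedT], R],
) -> List[R]:
    """Overlap loading batch i+1 (background thread) with computing on batch i (caller's thread)."""
    results: List[R] = []
    if not batches:
        return results
    with ThreadPoolExecutor(max_workers=1) as io_pool:
        pending = io_pool.submit(load, batches[0])
        for i in range(len(batches)):
            data = pending.result()  # wait until batch i has been loaded
            if i + 1 < len(batches):
                pending = io_pool.submit(load, batches[i + 1])  # prefetch the next batch
            results.append(compute(data))  # runs while the next load is in flight
    return results
```

When `load` spends its time waiting on I/O, the total time per batch approaches the larger of the load and compute times rather than their sum.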

The Architecture of LMCache

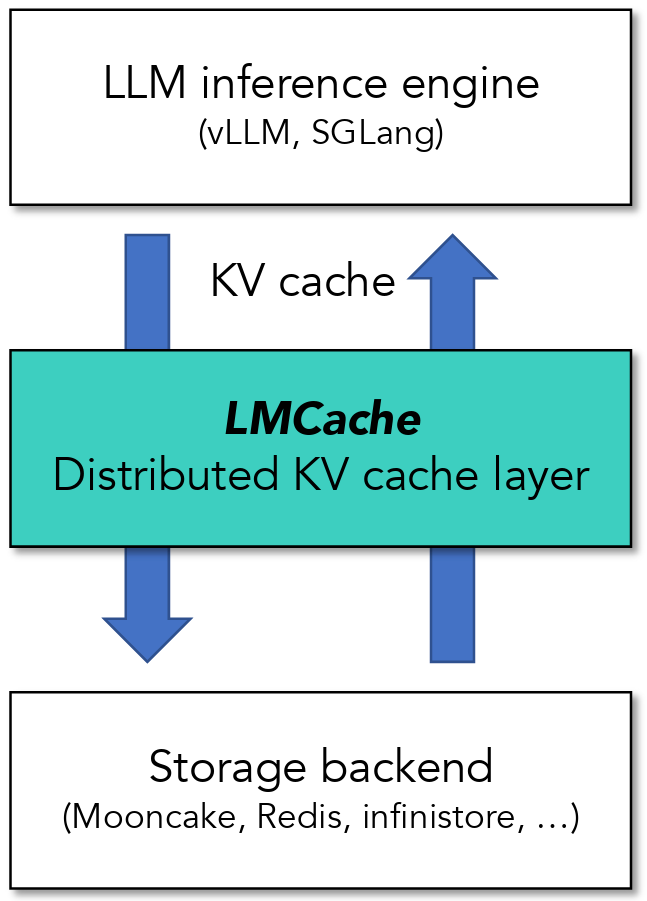

LMCache strategically positions itself between LLM inference engines and storage backends. This architecture is designed to streamline data flows, ensuring that caching occurs seamlessly without compromising speed or accuracy.

LMCache sits between LLM Inference Engines and storage backends.
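A layer between engines and backends suggests two pieces: a common interface that each storage tier implements, and a control layer on top that offers the lookup, movement, pinning, and cleanup operations listed among the key features. The sketch below is a minimal illustration under that assumption; `StorageBackend`, `InMemoryBackend`, and `KVController` are hypothetical names, not LMCache classes, and compression is omitted.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Optional, Sequence, Set


class StorageBackend(ABC):
    """Hypothetical backend interface (not LMCache's API): one tier such as CPU RAM or disk."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def put(self, key: str, kv: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> Optional[bytes]: ...

    @abstractmethod
    def delete(self, key: str) -> None: ...

    @abstractmethod
    def keys(self) -> List[str]: ...


class InMemoryBackend(StorageBackend):
    """Dict-backed stand-in for a real storage tier."""

    def __init__(self, name: str) -> None:
        super().__init__(name)
        self._data: Dict[str, bytes] = {}

    def put(self, key: str, kv: bytes) -> None:
        self._data[key] = kv

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)

    def delete(self, key: str) -> None:
        self._data.pop(key, None)

    def keys(self) -> List[str]:
        return list(self._data)  # a copy, so callers may delete while iterating


class KVController:
    """Hypothetical control layer: lookup, move, pin, and cleanup across backends."""

    def __init__(self, backends: Sequence[StorageBackend]) -> None:
        self.backends = list(backends)
        self.pinned: Set[str] = set()

    def _backend(self, name: str) -> StorageBackend:
        for backend in self.backends:
            if backend.name == name:
                return backend
        raise KeyError(f"unknown backend {name!r}")

    def lookup(self, key: str) -> Optional[str]:
        """Name of the first backend holding `key`, or None."""
        for backend in self.backends:
            if backend.get(key) is not None:
                return backend.name
        return None

    def move(self, key: str, src: str, dst: str) -> None:
        source, target = self._backend(src), self._backend(dst)
        kv = source.get(key)
        if kv is None:
            raise KeyError(key)
        target.put(key, kv)
        source.delete(key)

    def pin(self, key: str) -> None:
        self.pinned.add(key)  # pinned entries survive cleanup

    def unpin(self, key: str) -> None:
        self.pinned.discard(key)

    def cleanup(self) -> int:
        """Delete every unpinned entry; return how many were removed."""
        removed = 0
        for backend in self.backends:
            for key in backend.keys():
                if key not in self.pinned:
                    backend.delete(key)
                    removed += 1
        return removed
```

Keeping the backend interface small is what would let new tiers (a remote store, for instance) be added without changing the control layer.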

Performance Metrics

Recent evaluations show that, when combined with vLLM, LMCache improves throughput by up to 15x in workloads such as multi-round question answering, which is central to chatbots, and document analysis, including retrieval-augmented generation (RAG). These gains make LMCache a valuable tool for enterprises looking to optimize their inference systems.

Rapid Adoption and Community Growth

LMCache is quickly gaining adoption in enterprise systems, and these deployments provide valuable insights for future KV caching solutions. The source code is openly available on GitHub in the LMCache GitHub Repository, which invites the community to contribute to LMCache and extend its capabilities.

Getting Started with LMCache

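If you use vLLM as your serving engine, setting up LMCache is straightforward. Install both packages, then start a vLLM server with LMCache configured as its KV connector:

```bash
# Install LMCache and vLLM
pip install lmcache vllm

# Serve a model with LMCache as the KV transfer connector.
# Extend the JSON passed to --kv-transfer-config to match your deployment.
vllm serve Qwen/Qwen3-4B-Instruct-2507 \
  --kv-transfer-config '{"kv_connector":"LMCacheConnectorV1", "kv_role":"kv_both"}'
```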

With this setup, your LMCache-augmented vLLM server is up and running. Setting `kv_role` to `kv_both` means this vLLM instance both stores KV caches and loads them back.
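To exercise the cache, send several requests that share a long prefix, as in multi-round question answering over one document; later requests can then reuse the KV cache computed for the shared context. This sketch assumes vLLM's OpenAI-compatible completions endpoint on its default port 8000 and the model served above; `build_payloads` and `send` are hypothetical helpers, and only the standard library is used.

```python
import json
import urllib.request
from typing import Dict, List

# Assumptions: vLLM's OpenAI-compatible server on the default port, serving this model.
COMPLETIONS_URL = "http://localhost:8000/v1/completions"
MODEL = "Qwen/Qwen3-4B-Instruct-2507"


def build_payloads(shared_context: str, questions: List[str], max_tokens: int = 64) -> List[Dict]:
    """One completion request per question; every prompt starts with the same context."""
    return [
        {
            "model": MODEL,
            "prompt": f"{shared_context}\n\nQuestion: {question}\nAnswer:",
            "max_tokens": max_tokens,
        }
        for question in questions
    ]


def send(payload: Dict, url: str = COMPLETIONS_URL) -> str:
    """POST one request to the running server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["choices"][0]["text"]
```

With the server running, calling `send` on each payload in turn should let every request after the first skip most of the prefill work for the shared context.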

Learn More About LMCache

There are many more resources for anyone who wants to dive deeper into LMCache. Whether you are an enterprise user or a developer interested in the details of KV caching, LMCache offers features suited to a wide range of needs.

If you have questions or seek further discussion, we encourage you to join the conversation on LMCache’s Slack community. We’re excited to hear from you and explore how LMCache can elevate your AI projects!

Inspired by: Source