The ERNIE open-source model family has been quiet for a while, but the latest release aims to make the wait worthwhile. It arrived with little fanfare, yet it is poised to make a significant impact. With a “Thinking with Images” mode in a model that activates only 3B parameters, a lot is on offer. This article serves as a guide to ERNIE-4.5-VL, testing it against the performance claims made at its release.

What is ERNIE-4.5-VL?

ERNIE-4.5-VL-28B-A3B-Thinking might be the longest model name in history, but what it offers more than makes up for it. Built upon the ERNIE-4.5-VL-28B-A3B architecture, it targets stronger multimodal reasoning. As the name indicates, it has 28B total parameters, of which only 3B are active per token. Despite that compact active size, it is claimed to outperform competitors like Gemini-2.5-Pro and GPT-5-High on various document and chart understanding benchmarks. A standout feature is “Thinking with Images,” which lets the model zoom in and out of an image to capture finer details.

How to Access ERNIE-4.5-VL?

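The easiest way to try the model is through HuggingFace Spaces. To load it yourself with the transformers library, you can start from boilerplate similar to this:

from transformers import pipeline

# "image-text-to-text" is the transformers pipeline task for models that take an image plus a text prompt
model = pipeline("image-text-to-text", model="baidu/ERNIE-4.5-VL-28B-A3B-Thinking")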

This allows you to harness the multimodal capabilities of the ERNIE model with ease.
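As a slightly fuller sketch of how a query might be sent to the model, the snippet below builds a chat-style message that pairs one image with one question and passes it to the pipeline. The helper names `build_messages` and `ask` are hypothetical, and the image URL you pass in is your own. It is an assumption that this checkpoint loads through the generic pipeline and that it needs `trust_remote_code=True`; check the model card before relying on either.

```python
MODEL_ID = "baidu/ERNIE-4.5-VL-28B-A3B-Thinking"


def build_messages(image_url: str, question: str) -> list[dict]:
    """Build a single-turn chat message pairing one image with one question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "url": image_url},
                {"type": "text", "text": question},
            ],
        }
    ]


def ask(image_url: str, question: str, max_new_tokens: int = 256):
    """Run one image + question through the model (downloads the weights on first use)."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import pipeline

    # Assumption: the checkpoint works with the generic pipeline and ships
    # custom modeling code, hence trust_remote_code=True.
    pipe = pipeline("image-text-to-text", model=MODEL_ID, trust_remote_code=True)
    return pipe(text=build_messages(image_url, question), max_new_tokens=max_new_tokens)
```

Calling `ask(...)` loads a 28B-parameter checkpoint, so it needs substantial GPU memory. For quick experiments the hosted Space is the more practical route.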

Let’s Try ERNIE 4.5

To evaluate how well ERNIE-4.5-VL performs against contemporaries like Gemini-2.5-Pro, I tested it on two key vision tasks:

- Object Detection

- Dense Image Understanding

These tasks were selected because they are complex and have historically been difficult for vision models. The evaluation was conducted through the HuggingFace Spaces interface.

1. Object Detection

For the Object Detection task, I used the notorious finger-counting problem:

Query: “How many fingers are there in the image?”

Response: The model struggled with this seemingly simple query and returned an incorrect count. It did not account for the possibility that a hand may have more than five fingers, which highlights a significant limitation.

To see how Gemini-2.5-Pro would handle the same task, I ran the same query on it.

Unfortunately, Gemini-2.5-Pro also failed to provide the correct answer, mirroring the shortcoming seen in ERNIE.

2. Dense Image Understanding

Next, I tested a large, detail-packed image of currencies from different parts of the world, measuring 12528 × 8352 pixels and exceeding 7 MB in size. Dense images like this often pose a challenge for models.

Query: “What can you find from this image? Provide exact figures and details.”

Response: The model identified several details accurately, although it misread some others. The errors likely stemmed from OCR inaccuracies, but the ability to process content this dense is considerable progress.

Comparatively, Gemini-2.5-Pro failed to convey any information from the same image, demonstrating ERNIE-4.5-VL’s prowess even with only 3 billion active parameters.
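To put the 12528 × 8352 image in perspective, the sketch below computes its pixel count and shows one way to split such an image into smaller crops that can be inspected one at a time. This is only a client-side illustration of zooming in on regions; it is not a description of how “Thinking with Images” works inside the model. The function names and the 4176-pixel tile size are hypothetical choices for this example.

```python
def megapixels(width: int, height: int) -> float:
    """Total pixel count of an image, in millions of pixels."""
    return width * height / 1_000_000


def tile_boxes(width: int, height: int, tile: int) -> list[tuple[int, int, int, int]]:
    """Return (left, top, right, bottom) boxes that cover the image without overlap.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    boxes = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            right = min(left + tile, width)
            bottom = min(top + tile, height)
            boxes.append((left, top, right, bottom))
    return boxes


# The test image from this section: roughly 104.6 megapixels.
size_mp = megapixels(12528, 8352)

# A 4176-pixel tile divides this image evenly into a 3 x 2 grid of crops.
boxes = tile_boxes(12528, 8352, 4176)
```

The boxes use the same (left, upper, right, lower) ordering that Pillow's `Image.crop` expects, so each one could be cropped out and sent to a model as a separate, smaller image.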

Performance Insights

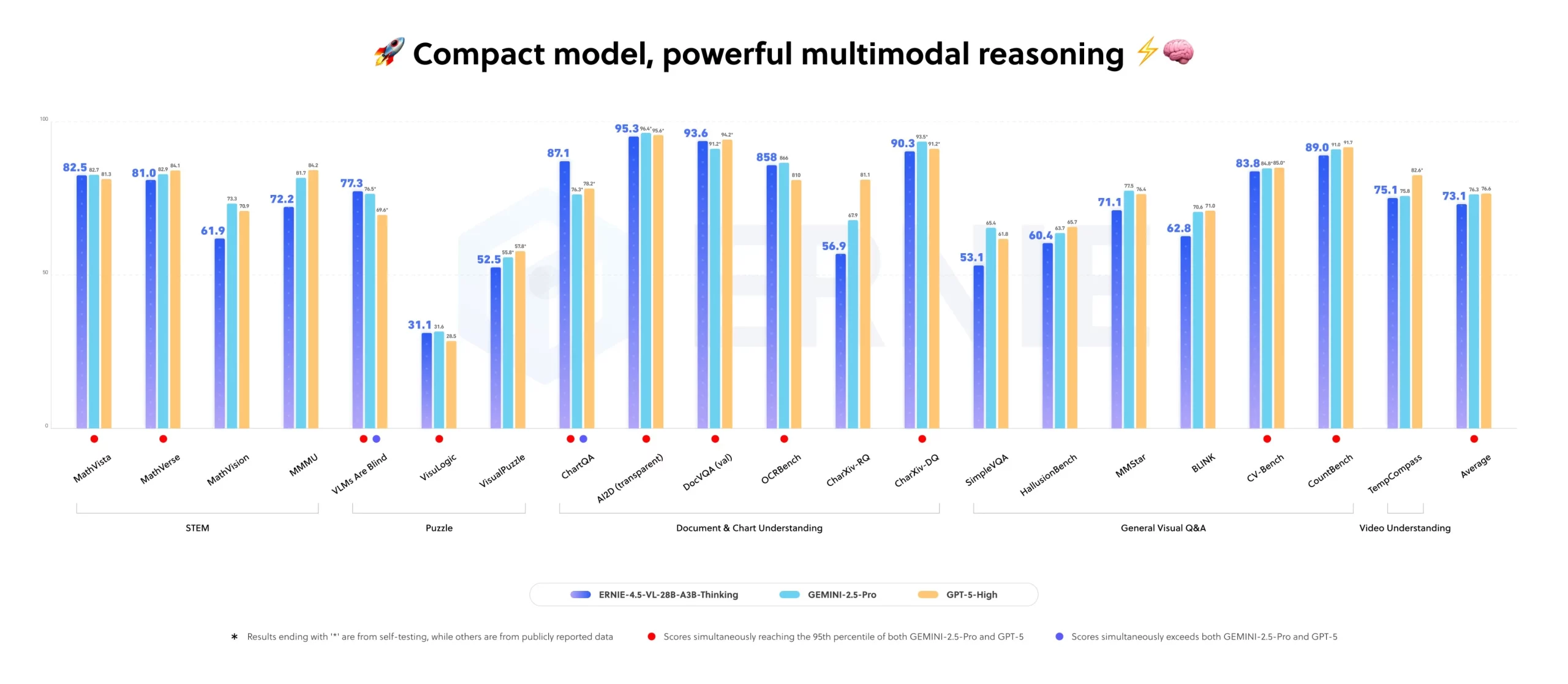

While personal testing provided valuable insights, official benchmark results can help clarify the model’s overall capabilities. As depicted below, ERNIE-4.5-VL showcases significantly improved performance, especially in chart QA tasks:

The benchmarks suggest that ERNIE-4.5-VL is indeed making strides in document and chart understanding, which is consistent with the claims the company made in its release announcement.

Frequently Asked Questions

A. It’s Baidu’s latest multimodal model, with 3B active parameters out of 28B total. It is designed for advanced reasoning across text and images and is reported to outperform models like Gemini-2.5-Pro in document and chart understanding.

A. Its “Thinking with Images” ability allows interactive zooming within images, helping it capture fine details and outperform larger models in dense visual reasoning.

A. Not necessarily. Many researchers now believe the future lies in optimizing architectures and efficiency rather than endlessly scaling parameter counts.

A. Because bigger models are costly, slow, and energy-intensive. Smarter training and parameter-efficient techniques deliver similar or better results with fewer resources.
